Ditching the Cloud: Why You Should Consider Running Your Own Local Chatbot Today (And 4 Compelling Reasons)

Key Takeaways

- Local chatbots can be more secure and private than cloud-based ones, which may record, share, and analyze your conversations for profit.

- Local chatbots can be customized to suit your preferences, unlike commercial ones that may struggle to adapt to different commands over the course of a conversation.

- Local chatbots can be used offline and save you money on costly monthly subscriptions.

Few people run chatbots on their own machines because cloud-based chatbots like ChatGPT, Claude, and other AIs seem good enough. However, in my experience, there are great reasons to run local chatbots on your own computer.

1 Enhanced Privacy

When I started running a chatbot on my PC, I felt free to have more personal conversations than I would with commercial options like ChatGPT or Gemini. Those chatbots, at least by default, record and analyze every single conversation you have with them.

In contrast, locally run chatbots provide enhanced privacy because they run entirely on your personal hardware without sending data back to a central server or computer. This means you retain full control over your data, making it more likely that your conversations remain private and secure.

However, it’s important to note that not all local chatbots guarantee high levels of privacy. Open-source chatbots are the best option for privacy because you can review the code behind the bots to see that your data is not being sent or copied anywhere other than your machine. Running a chatbot locally, without needing to be online all the time, doesn’t eliminate the possibility that it will send info back home once you’re connected again!

2 Cost Savings

Commercial chatbots are not made to make your life easier, regardless of what their companies say. They have been designed to make money. For example, OpenAI started out as a non-profit, but it eventually established a for-profit arm. Additionally, leading rivals like Character.ai and Anthropic (the company behind Claude) have been for-profit since their start.

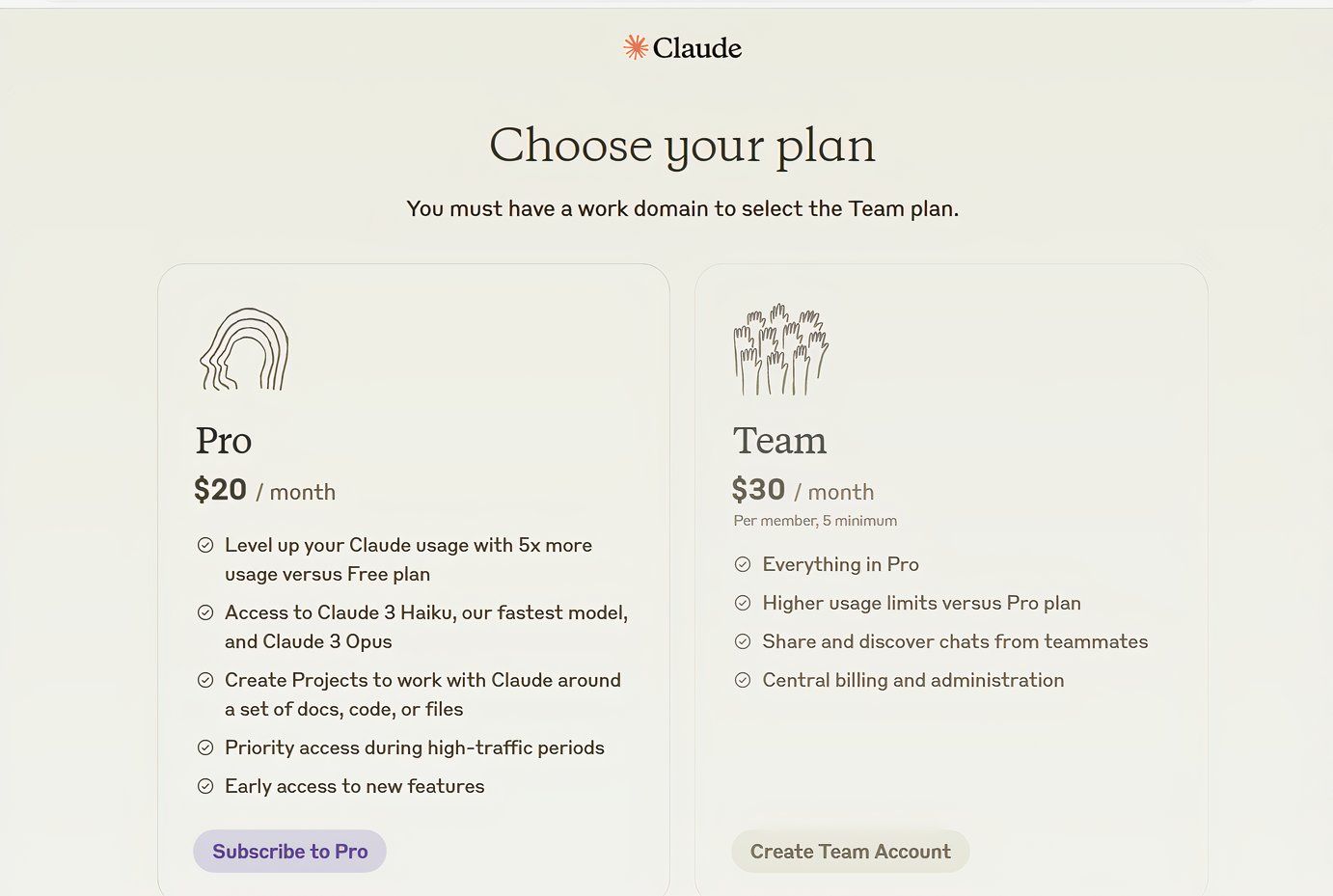

The pay model these companies have all opted for is subscription-based. Most plans, like those for ChatGPT and Claude, cost $20 a month. In today’s economy, $20 a month adds up over time. If you subscribe to just two services, you will pay $480 a year, and more if you add a third.
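To make the subscription arithmetic concrete, here is a minimal sketch. The $20 monthly price is the figure quoted above. The helper name `annual_cost` and the subscription counts are illustrative assumptions, not anything the providers publish.

```python
def annual_cost(monthly_price: float, subscriptions: int = 1) -> float:
    """Yearly spend for several subscriptions at the same monthly price."""
    return monthly_price * 12 * subscriptions


# $20 a month is the price quoted in the article; the counts are examples.
for count in (1, 2, 3):
    print(f"{count} subscription(s): ${annual_cost(20, count):.0f} per year")
```

Two subscriptions at that price come to $480 a year, which is where the "close to $500" figure comes from.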

Local chatbots can help you save on those costs. If you are a heavy chatbot user, local chatbots could save you from subscribing to multiple companies. If you don’t use them that often, local bots are an even wiser investment.

Even if all you have is a laptop with four to eight gigabytes of RAM, you can still save money on chatbots by using small, low-RAM large language models, which can run efficiently on modest hardware.
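A rough way to judge whether a model suits a low-RAM laptop is to estimate the size of its weights: parameter count times bits per weight, divided by eight to get bytes. The sketch below does only that. The function names and the 2 GB headroom for the operating system and runtime are assumptions for illustration, and real memory use will be higher once the conversation context is loaded.

```python
def weights_size_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes).

    One billion parameters at 8 bits each is about 1 GB, so the estimate
    is simply billions of parameters * bits per weight / 8.
    """
    return params_billions * bits_per_weight / 8


def fits_in_ram(params_billions: float, bits_per_weight: int,
                ram_gb: float, headroom_gb: float = 2.0) -> bool:
    """Crude check; headroom_gb is an assumed allowance for OS and runtime."""
    return weights_size_gb(params_billions, bits_per_weight) + headroom_gb <= ram_gb


# Example model sizes and RAM amounts, chosen for illustration only.
for params, bits, ram in [(3, 4, 4), (7, 4, 8), (7, 16, 8)]:
    size = weights_size_gb(params, bits)
    verdict = "fits" if fits_in_ram(params, bits, ram) else "too large"
    print(f"{params}B model at {bits}-bit: ~{size:.1f} GB of weights, {verdict} in {ram} GB RAM")
```

By this estimate, a 7-billion-parameter model stored at 4 bits per weight needs about 3.5 GB for its weights, while the same model at 16 bits needs about 14 GB.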

3 Offline Capabilities

Another great plus to running your own local chatbot is that you can use it offline. This was handy for me a few months ago when I was flying. I didn’t download any movies or bring a book, but I had a DistilBERT model on my gaming laptop.

I was able to have some exciting conversations with an AI while flying at 35,000 feet! Remember that running local models can drain your battery at the same rate as an intense PC game. So it’s a good thing that it was a quick flight!

Regardless, using a chatbot without the internet is a big advantage during service outages or when you want to be disconnected from the World Wide Web but still use the latest AI technology.

4 Improved Latency

Local chatbots typically run smaller models trained on less data than the big commercial players use. However, they can also respond much faster if you are using the right model for your hardware. This is in part because you are not sharing the AI with anyone else. When you interact with a commercial chatbot, your input has to travel over the internet to the company’s servers, be processed, and then be sent back to you. This round trip can introduce noticeable delays, particularly during peak usage times or if there are network issues.

A local chatbot processes everything on your machine, so there is no network round trip to wait for. The AI is dedicated solely to you, without any resource sharing, which means there are no other users competing for its attention. As a result, a local chatbot that is well matched to your hardware can provide consistently fast and reliable responses, outperforming commercial AIs on the web or through a phone app.
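If you want to check the latency claim on your own setup, you can time a few requests and compare the medians. This is a minimal sketch using only the Python standard library. `fake_local_reply` is a hypothetical stand-in that just sleeps, so swap in a real call to your local model (or to a cloud service) to get meaningful numbers.

```python
import statistics
import time
from typing import Callable


def median_latency(ask: Callable[[], object], runs: int = 5) -> float:
    """Call `ask` several times and return the median wall-clock time in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        ask()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def fake_local_reply() -> str:
    # Hypothetical stand-in: replace with a real request to your chatbot.
    time.sleep(0.01)
    return "hello"


print(f"median latency: {median_latency(fake_local_reply):.3f} s")
```

The median is used instead of the mean so that one slow request, such as the first one while the model is still loading, does not skew the result.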

Running a chatbot on my PC was a fantastic learning opportunity. I gained hands-on experience with AI and machine learning by setting up, configuring, and training the chatbot myself. You can easily do the same and enjoy chatbots with more privacy, cost savings, and improved latency. Try it today!

- Title: Ditching the Cloud: Why You Should Consider Running Your Own Local Chatbot Today (And 4 Compelling Reasons)

- Author: James

- Created at : 2024-12-30 16:30:55

- Updated at : 2025-01-04 16:36:04

- Link: https://technical-tips.techidaily.com/ditching-the-cloud-why-you-should-consider-running-your-own-local-chatbot-today-and-4-compelling-reasons/

- License: This work is licensed under CC BY-NC-SA 4.0.